The robots.txt file tells search engines how to crawl your website, which makes it an excellent SEO tool.

Today we will show you how to create and optimize a robots.txt file for your WordPress site.

The Robots.txt file

Site owners create this file to tell search engines how to crawl and index the pages on their website.

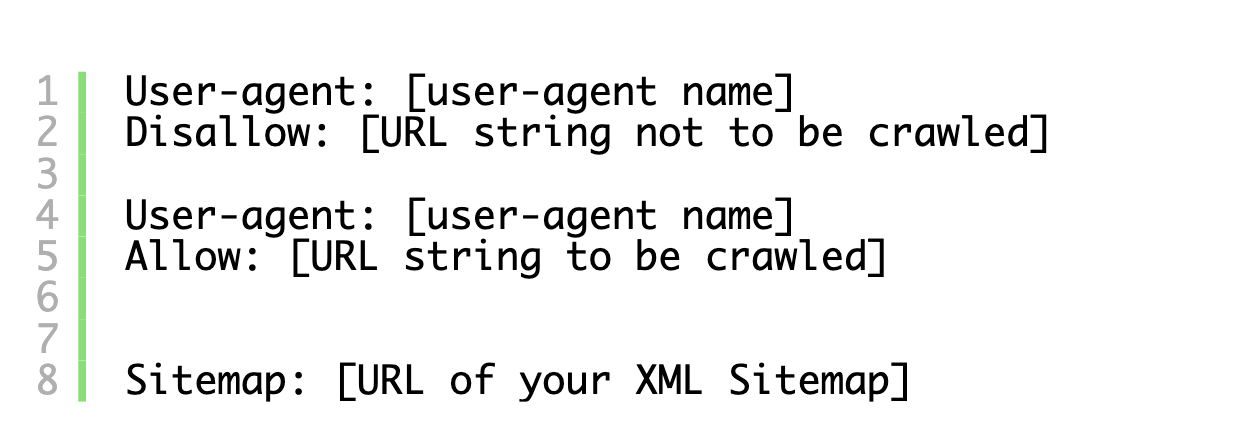

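The robots.txt file is stored in your website's root directory, which you might know as the main folder, so crawlers can find it at an address like https://example.com/robots.txt. Its basic format is simple. A User-agent line names the crawler that a group of rules applies to. It is followed by Disallow and Allow lines that list URL paths. Optional Sitemap lines point to your XML sitemaps.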
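Each line of the file is a `field: value` pair, and `#` starts a comment. Blank lines are conventionally used to separate groups. The sketch below illustrates that structure in Python. It assumes a simplified reading of the format, and the `parse_directives` helper and the example.com rules are hypothetical, not part of any library.

```python
def parse_directives(text):
    """Split robots.txt text into (field, value) pairs, dropping comments.

    Hypothetical helper for illustration; real crawlers do more validation.
    """
    pairs = []
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # '#' starts a comment
        if not line or ":" not in line:
            continue  # skip blank lines and lines without a field name
        field, value = line.split(":", 1)  # split on the first colon only
        pairs.append((field.strip().lower(), value.strip()))
    return pairs


# Hypothetical rules for example.com
sample = """\
# Rules for every crawler
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php

Sitemap: https://example.com/sitemap.xml
"""

for field, value in parse_directives(sample):
    print(f"{field} -> {value}")
```

Splitting on the first colon only keeps the `https:` inside a Sitemap URL intact.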

A file can contain many lines of instructions that allow or disallow specific URLs, and it can list multiple sitemaps. Any URL you do not disallow is treated as crawlable, so search engine bots will crawl it.

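For example, a WordPress site might allow crawling of /wp-content/uploads/ while disallowing /wp-content/plugins/ and /wp-admin/, and then list two sitemaps.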
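Below is a hypothetical example file for example.com, checked with Python's standard `urllib.robotparser` module to show how a crawler reads it. This module applies the first rule that matches in file order and does not understand `*` wildcards inside paths. Google instead uses the most specific match, so treat this as a rough check rather than a replica of Googlebot. `site_maps()` needs Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for example.com; the paths mirror common WordPress folders.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/plugins/
Disallow: /wp-admin/

Sitemap: https://example.com/post-sitemap.xml
Sitemap: https://example.com/page-sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse() takes a list of lines

for url in (
    "https://example.com/wp-admin/options.php",         # disallowed
    "https://example.com/wp-content/uploads/logo.png",  # explicitly allowed
    "https://example.com/blog/hello-world/",            # not mentioned at all
):
    print(url, parser.can_fetch("Googlebot", url))

print(parser.site_maps())  # every Sitemap line, in file order
```

The last URL does not appear anywhere in the file, so it is crawlable by default.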

Do you actually need a robots.txt file?

Without this file, search engines will still crawl and index your site, but you will not have control over which folders and pages they crawl.

This is not an issue if you're just starting a website that has little content. As your website grows, however, you will need better control over which content search engines can see.

The reason is that search bots have a limited crawl quota for each website.

This means they can only crawl a certain number of pages during each crawl session. If they cannot get through all of your pages, they continue in the next session, which can slow down how quickly your site is indexed.

You can avoid this by disallowing search bots from crawling unnecessary pages, such as plugin files, WordPress admin pages, and the themes folder.

This helps search engines spend their crawl quota on relevant pages and index them faster.
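To make the crawl-quota argument concrete, the sketch below filters a hypothetical crawl queue through rules that block the plugin, theme and admin folders. Only content URLs are left for a bot to spend its quota on. The URLs, folder names and the `crawlable` helper are illustrative assumptions, and real crawlers schedule URLs in far more complex ways.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules that keep bots out of plugin, theme and admin folders.
RULES = """\
User-agent: *
Disallow: /wp-content/plugins/
Disallow: /wp-content/themes/
Disallow: /wp-admin/
"""

# A hypothetical crawl queue a bot might build for example.com.
QUEUE = [
    "https://example.com/",
    "https://example.com/wp-admin/edit.php",
    "https://example.com/wp-content/plugins/some-plugin/readme.txt",
    "https://example.com/wp-content/themes/some-theme/footer.php",
    "https://example.com/blog/first-post/",
    "https://example.com/blog/second-post/",
]


def crawlable(urls, robots_txt, agent="Googlebot"):
    """Return only the URLs that the robots.txt rules let `agent` fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(agent, url)]


kept = crawlable(QUEUE, RULES)
print(f"{len(kept)} of {len(QUEUE)} queued URLs remain:")
for url in kept:
    print(" ", url)
```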

The robots.txt file is also useful if you want to stop search engines from indexing a page or post on your site. It is not a way to hide content from readers, since anyone can still visit the page, but it will help keep that content out of search results.

What does the best robots.txt file look like?

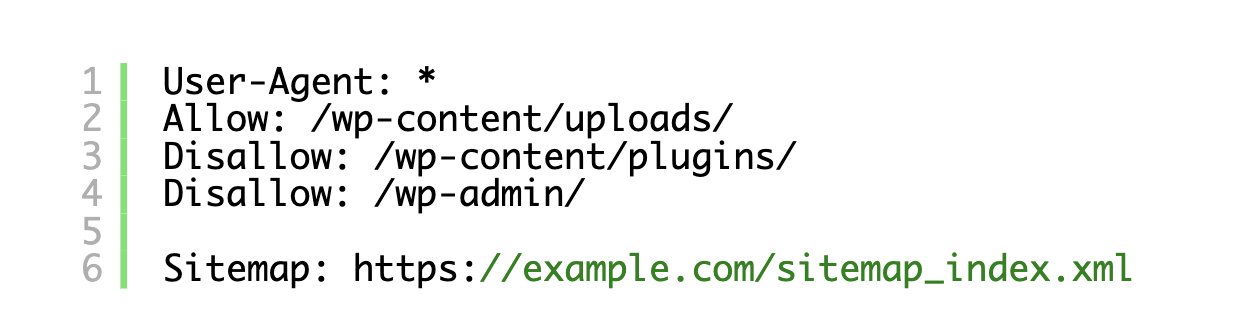

Many popular blogs use a very simple version of the file.

In the example above, the file lets bots index all content and gives them links to the site's XML sitemaps.
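A minimal file of this kind might look like the hypothetical one below, again checked with Python's `urllib.robotparser`. An empty `Disallow:` line blocks nothing, and the `Sitemap` line hands bots the sitemap URL. The example.com address and the sitemap filename are assumptions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical "simple" file: the empty Disallow value blocks nothing.
SIMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow:

Sitemap: https://example.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(SIMPLE_ROBOTS_TXT.splitlines())

print(parser.can_fetch("Googlebot", "https://example.com/any/page/"))
print(parser.site_maps())
```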

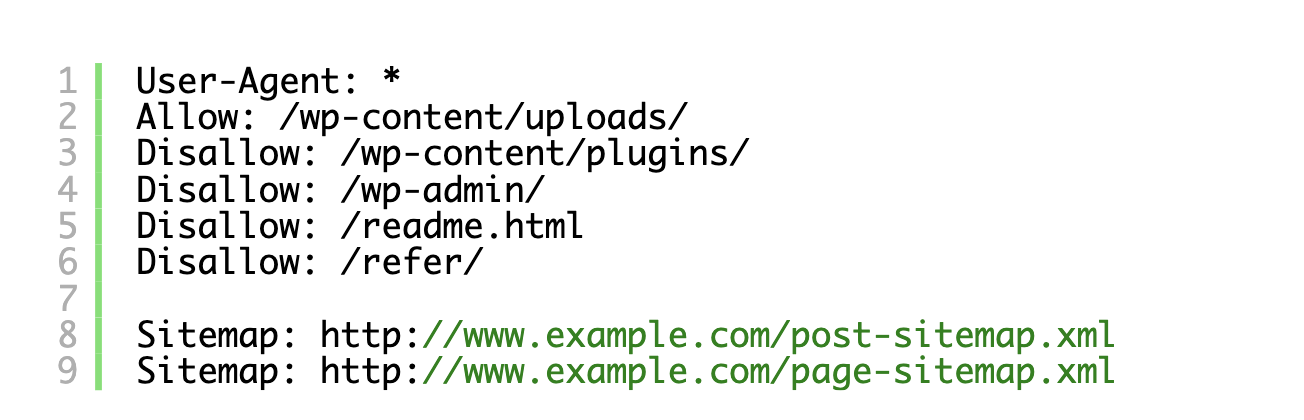

For WordPress websites, it's best to use the following rules in the file:

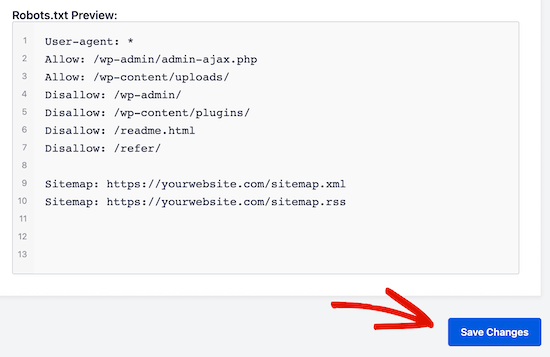

With these rules, search bots can index all of your files and images, but they are not allowed to crawl the login page, the readme file, the admin area, or affiliate links.

The sitemap links help Googlebot find all the pages on your website.
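Here is a rough, hypothetical reconstruction of the rules just described, checked with Python's `urllib.robotparser`. It allows the uploads folder and blocks the login page, the readme file, the admin area and an affiliate-link prefix. The `/refer/` prefix, the sitemap URLs and the sample paths are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical WordPress rules; "/refer/" stands in for an affiliate-link prefix.
WP_ROBOTS_TXT = """\
User-agent: *
Allow: /wp-content/uploads/
Disallow: /wp-login.php
Disallow: /readme.html
Disallow: /wp-admin/
Disallow: /refer/

Sitemap: https://example.com/post-sitemap.xml
Sitemap: https://example.com/page-sitemap.xml
"""

parser = RobotFileParser()
parser.parse(WP_ROBOTS_TXT.splitlines())

for path in (
    "/wp-content/uploads/2024/05/photo.jpg",  # images stay crawlable
    "/sample-post/",                          # ordinary content
    "/wp-login.php",                          # login page
    "/readme.html",                           # readme file
    "/wp-admin/",                             # admin area
    "/refer/some-product/",                   # affiliate link
):
    print(path, parser.can_fetch("Googlebot", "https://example.com" + path))

print(parser.site_maps())
```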

How can you create a robots.txt file?

There are two ways to create a robots.txt file.

Using the All in One SEO plugin

All in One SEO is the best SEO plugin available on the market. It comes with a built-in robots.txt generator that is extremely user-friendly.

After installing and activating the plugin, you can create and edit the robots.txt file from the WordPress admin area.

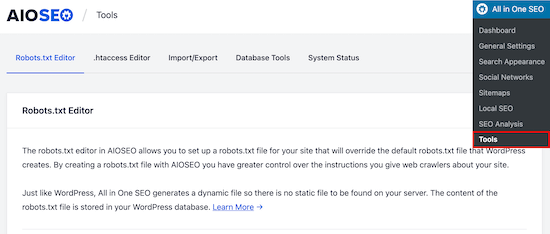

Click on All in One SEO – Tools to start editing.

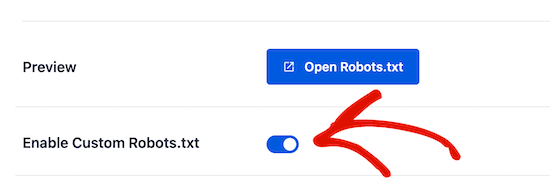

Switch on the Enable Custom Robots.txt toggle. You can now create a custom robots.txt file in WordPress.

The Robots.txt Preview section at the bottom of the screen shows your existing file, including the default rules added by WordPress.

These rules tell search engines not to crawl the WordPress core files, let bots index all other content, and give them the link to your XML sitemaps.
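WordPress's own default file typically contains `Disallow: /wp-admin/` followed by `Allow: /wp-admin/admin-ajax.php`. When rules like these overlap, Google uses the most specific rule, meaning the longest matching path, and an allow rule wins a tie. That precedence is described in RFC 9309. Python's `urllib.robotparser` applies the first matching rule instead, so it would block `admin-ajax.php` here. The hypothetical `is_allowed` helper below is a simplified sketch of longest-match precedence. It assumes plain path prefixes and deliberately ignores the `*` and `$` wildcards.

```python
# Simplified sketch of "most specific rule wins" (RFC 9309), without wildcards.

DEFAULT_WP_RULES = [
    ("disallow", "/wp-admin/"),
    ("allow", "/wp-admin/admin-ajax.php"),
]


def is_allowed(rules, path):
    """Return True if `path` may be crawled under (directive, prefix) rules."""
    best_length = -1
    allowed = True  # no matching rule means the path is crawlable
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue  # empty values and non-matching prefixes are ignored
        length = len(prefix)
        # A longer match wins; on a tie, the allow rule wins.
        if length > best_length or (length == best_length and directive == "allow"):
            best_length = length
            allowed = directive == "allow"
    return allowed


for path in ("/wp-admin/admin-ajax.php", "/wp-admin/options.php", "/hello-world/"):
    print(path, is_allowed(DEFAULT_WP_RULES, path))
```

Because precedence depends on path length rather than line order, the Allow line still wins even though it comes after the broader Disallow.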

You can now make custom changes to the robots.txt file.

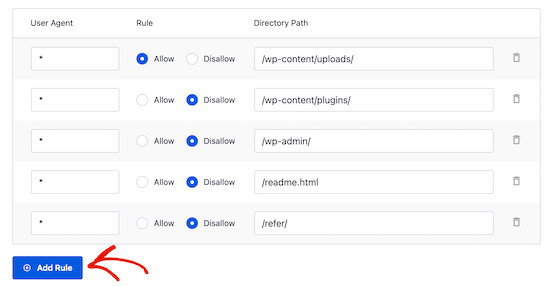

In the User Agent field, enter the name of a specific crawler, or enter * to apply the rule to all user agents.

Next, choose whether to Allow or Disallow search engines from crawling.

Enter the directory path or filename in the Directory Path field.

The rule is applied to your file automatically. To add another rule, click the Add Rule button.

The Robots.txt Preview section then shows your file, with each set of rules listed under its User-agent line.
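The Python below is a hypothetical sketch of how rows from a rule editor map onto the file. Each row holds a user agent, Allow or Disallow, and a directory path, and the code groups rows by user agent. It is not All in One SEO's code, and the `/private-page/` path is made up. It also shows a common surprise. Under RFC 9309, which Google follows, a crawler obeys only the group that names it most specifically, so a `Googlebot` group does not inherit the rules in the `*` group.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rows: (user agent, directive, directory path).
RULE_ROWS = [
    ("*", "Disallow", "/wp-admin/"),
    ("*", "Allow", "/wp-admin/admin-ajax.php"),
    ("Googlebot", "Disallow", "/private-page/"),
]


def rows_to_robots_txt(rows):
    """Group rows by user agent, keeping the order agents first appear."""
    groups = {}
    for agent, directive, path in rows:
        groups.setdefault(agent, []).append(f"{directive}: {path}")
    blocks = ["\n".join([f"User-agent: {agent}", *lines]) for agent, lines in groups.items()]
    return "\n\n".join(blocks) + "\n"  # blank line between groups


robots_text = rows_to_robots_txt(RULE_ROWS)
print(robots_text)

# Googlebot matches its own group, so the * rules do not apply to it.
parser = RobotFileParser()
parser.parse(robots_text.splitlines())
print(parser.can_fetch("Googlebot", "https://example.com/wp-admin/"))
print(parser.can_fetch("Bingbot", "https://example.com/wp-admin/"))
```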

Use FTP to edit the robots.txt file manually

You will need an FTP client for this method.

Connect to your WordPress hosting account using the FTP client.

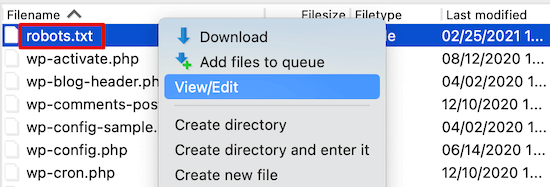

You should now see the robots.txt file in your website's root folder.

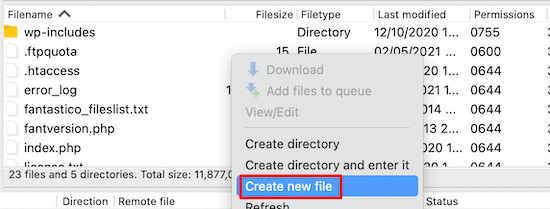

If you can’t locate it, then you probably don’t have a robots.txt file.

If this is the case, simply create one.

Because robots.txt is a plain text file, you can download it to your computer, edit it with any plain text editor, and then upload it back to your website's root folder.
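If you prefer to script the edit-and-upload step, here is a minimal sketch using Python's standard `pathlib` and `ftplib` modules. The helper names, host, user name, password and remote folder are hypothetical placeholders for your own hosting details. Plain FTP sends credentials unencrypted. Only the local save is run in the demo, because the upload needs a real server.

```python
import ftplib
import tempfile
from pathlib import Path


def write_robots_txt(folder, content):
    """Save robots.txt as a plain UTF-8 text file and return its path."""
    path = Path(folder) / "robots.txt"
    path.write_text(content, encoding="utf-8")
    return path


def upload_robots_txt(local_path, host, user, password, remote_dir="/"):
    """Upload the file over FTP; all arguments are placeholders (assumption)."""
    with ftplib.FTP(host) as ftp:
        ftp.login(user, password)
        ftp.cwd(remote_dir)  # assumption: this is your site's root folder
        with open(local_path, "rb") as fh:
            ftp.storbinary("STOR robots.txt", fh)


# Local demo only: write the file into a temporary folder.
demo_path = write_robots_txt(tempfile.mkdtemp(), "User-agent: *\nDisallow: /wp-admin/\n")
print(demo_path.read_text(encoding="utf-8"))
```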

Is your robots.txt file working?

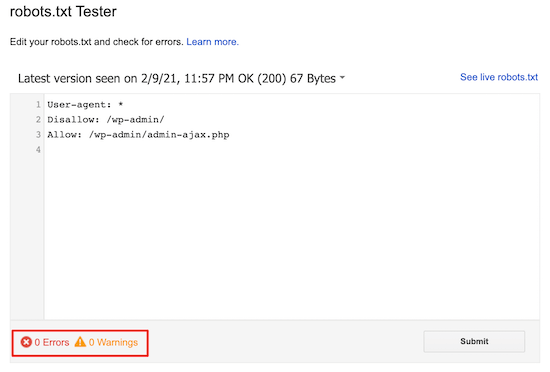

Once you have created the file, it's wise to test it.

A number of robots.txt testing tools are available; in this example, we will use Google Search Console.

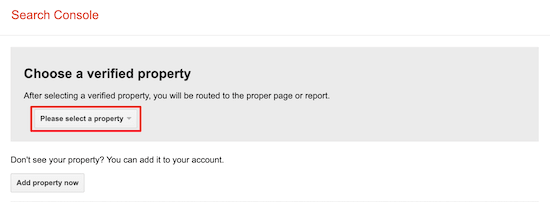

First, link your website to Google Search Console. You can then use Search Console's robots.txt testing tool.

Choose your property from the dropdown menu.

The tool automatically fetches your robots.txt file and highlights any warnings and errors.
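You can also run a quick local check alongside Search Console. The hypothetical `find_problems` helper below lists paths whose crawl status differs from what you intended. It relies on Python's `urllib.robotparser`, so it shares that module's first-match, no-wildcard limitations and is no substitute for Google's own tool. The example.com address and the paths are assumptions.

```python
from urllib.robotparser import RobotFileParser


def find_problems(robots_txt, must_allow, must_block,
                  agent="Googlebot", site="https://example.com"):
    """Return messages for paths whose crawl status differs from the intent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for path in must_allow:
        if not parser.can_fetch(agent, site + path):
            problems.append(f"{path} is blocked but should be crawlable")
    for path in must_block:
        if parser.can_fetch(agent, site + path):
            problems.append(f"{path} is crawlable but should be blocked")
    return problems


# A typo ("/wp-admn/") means the admin area is not actually blocked.
BUGGY = """\
User-agent: *
Disallow: /wp-admn/
"""

print(find_problems(BUGGY, must_allow=["/"], must_block=["/wp-admin/"]))
```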

Conclusion

The idea behind properly optimizing your robots.txt file is to keep search engines from crawling pages you don't want publicly available. It's best to follow the guidelines above when creating your own robots.txt file.

We hope this post helped you learn how to optimize your WordPress robots.txt file!